This article is also available in Italian: Microsoft Sentinel + Azure Arc: attivare la raccolta degli audit log – WindowServer.it

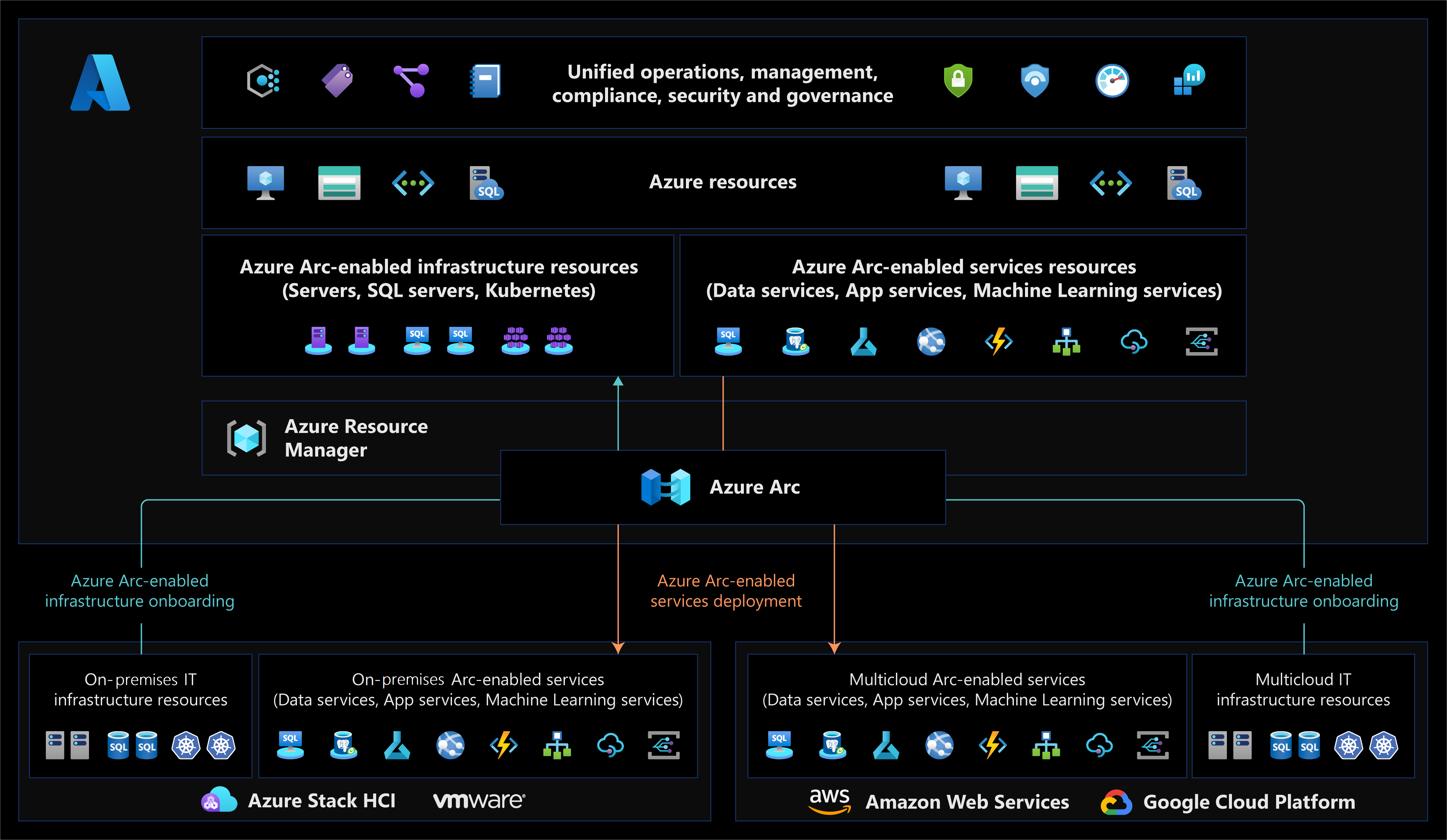

Microsoft Sentinel is the SIEM-SOAR platform that helps keep our infrastructure under control, whether it runs on-premises or in the cloud, on Microsoft Azure or on AWS, in a domain or in a workgroup, and regardless of whether it is Windows or Linux. Thanks to over 300 connectors, it can receive all kinds of information about what is happening to our resources. For example, we can find out whether a malicious file has been detected on our file server, thanks to the integration with Microsoft Defender XDR, or whether our storage account has been compromised, thanks to the integration with Microsoft Defender for Cloud.

In short, a myriad of sensors send information to build a full-fledged SOC platform. Microsoft Sentinel analyzes the information stored in a Log Analytics workspace, a database that hosts everything loaded by the connectors and that can also be used to collect logs from your own applications.

To give a little history, Log Analytics was formerly Operations Management Suite (and before that, System Center Advisor). It can be considered the father of Sentinel, since it already made it possible to collect events and performance data in order to run queries, generate alerts and do everything needed to monitor and manage your infrastructure.

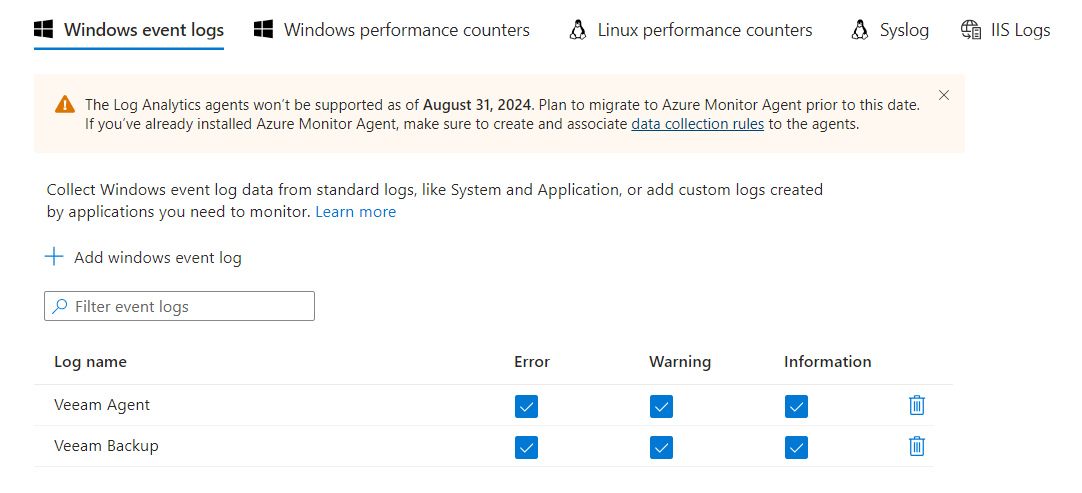

When Sentinel first arrived, the solution was not well regarded as an auditing product. The reason is that data was sent through the Microsoft Monitoring Agent (MMA), a derivative of the System Center Operations Manager agent which, in a very flat way, took all the data and sent it to its collection gateway, namely Log Analytics, without distinction.

Being unable to choose what to collect, and for which objects, had two consequences: a large amount of collected data (some of it not useful) and enormous workspace costs.

Time passed and the project evolved: Log Analytics became just a database, Sentinel became a SIEM-SOAR and, thanks to the introduction of Azure Arc, came the leap in quality.

Designed for much broader purposes than monitoring, Azure Arc uses much smarter integration logic and, above all, relies on the Azure Monitor Agent (AMA) for data collection. AMA uses Data Collection Rules (DCRs), where you define which data you want each agent to collect. Data Collection Rules let you manage data collection settings at scale and define unique, scoped configurations for subsets of machines. Azure Monitor Agent provides a variety of immediate benefits, including cost savings, a simplified management experience, and enhanced security and performance.

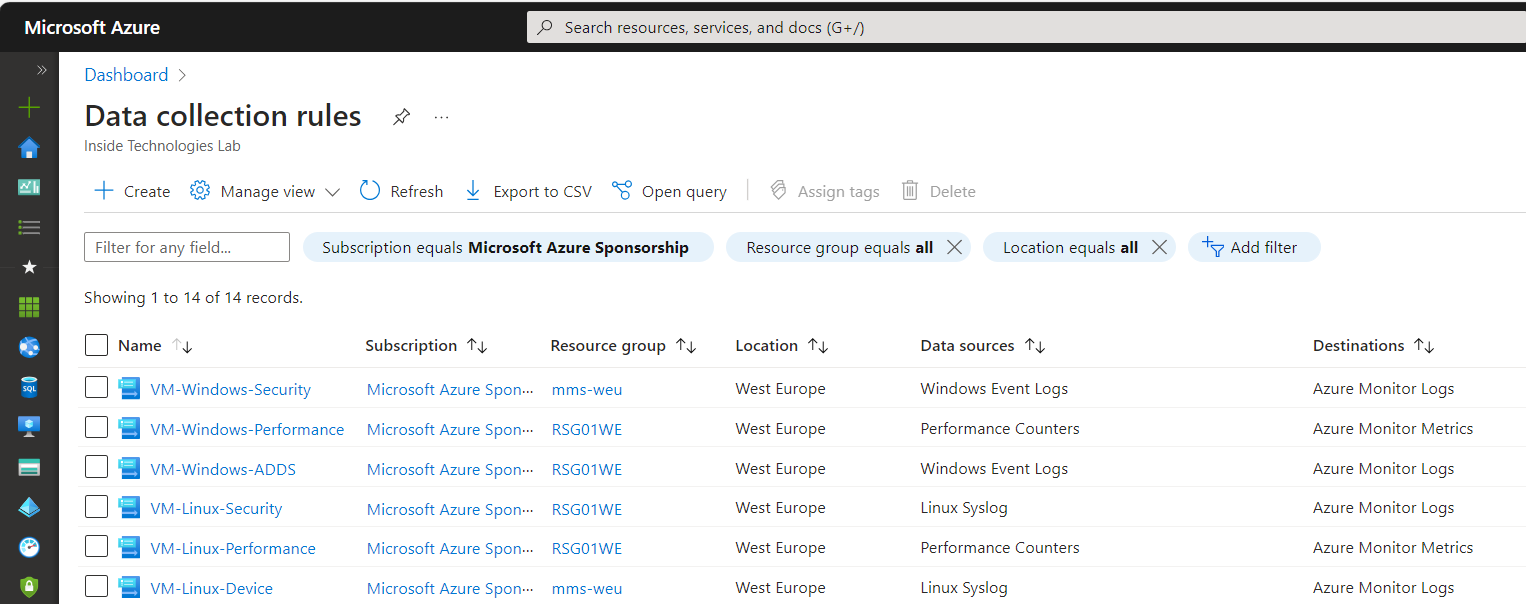

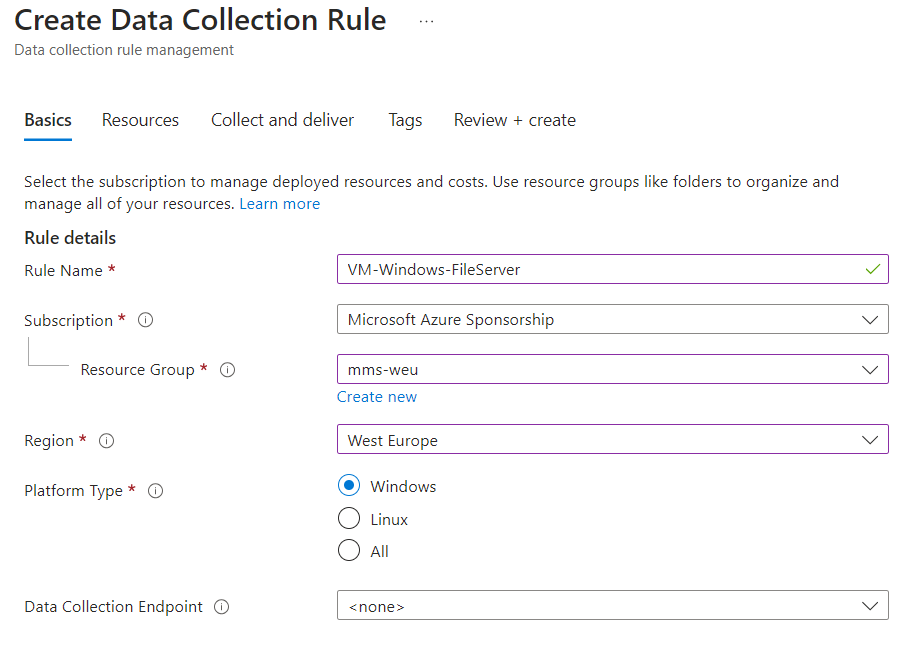

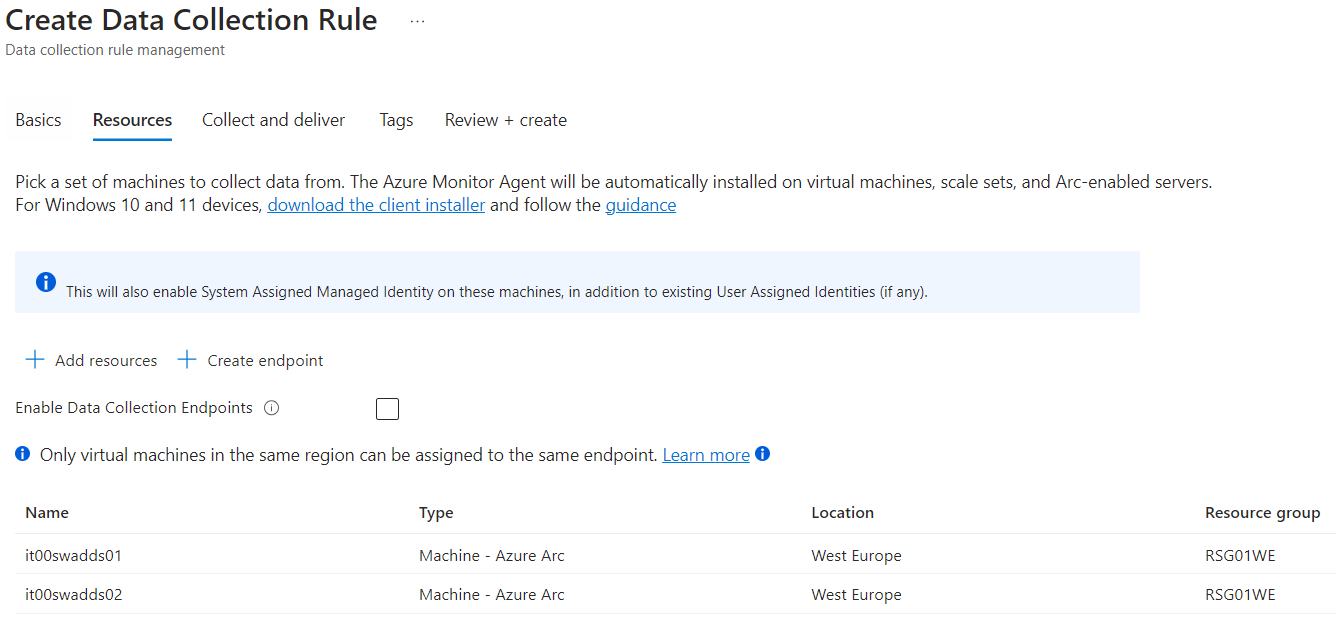

Data Collection Rules Configuration

Data Collection Rules are created within Microsoft Azure. The main screen immediately shows the difference from the past, when it was not possible to create different rules for different servers or log collection methods.

The only precaution is to know from the start what you want to achieve and, even more importantly, to use a naming convention for the objects, so that it is immediately clear what each rule does.

The resources to connect are equally important and depend on how you design the capture logic (e.g. if you want to capture system logs from the Domain Controllers only, select just those servers).
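
As an illustration only, a naming convention can be enforced with a small helper. The pattern `dcr-<os>-<scope>-<data>-<env>` and the function name `dcr_name` are hypothetical; nothing in Azure mandates them, so adapt the segments to your own standard.

```python
import re

# Hypothetical naming convention: dcr-<os>-<scope>-<data>-<env>.
# None of these segments is mandated by Azure; adapt them to your own standard.
ALLOWED_OS = {"win", "lnx"}


def dcr_name(os_family: str, scope: str, data: str, env: str) -> str:
    """Build a Data Collection Rule name from four descriptive segments."""
    if os_family not in ALLOWED_OS:
        raise ValueError(f"unknown OS family: {os_family!r}")
    segments = [os_family, scope, data, env]
    # Lowercase each segment and collapse every run of other characters
    # into a single hyphen, so the name stays readable in the portal.
    cleaned = [re.sub(r"[^a-z0-9]+", "-", s.lower()).strip("-") for s in segments]
    if not all(cleaned):
        raise ValueError("every segment must contain at least one letter or digit")
    return "dcr-" + "-".join(cleaned)


print(dcr_name("win", "Domain Controllers", "security audit", "prod"))
```

With a scheme like this, the rule name alone tells you the operating system, the servers in scope, the data collected and the environment.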

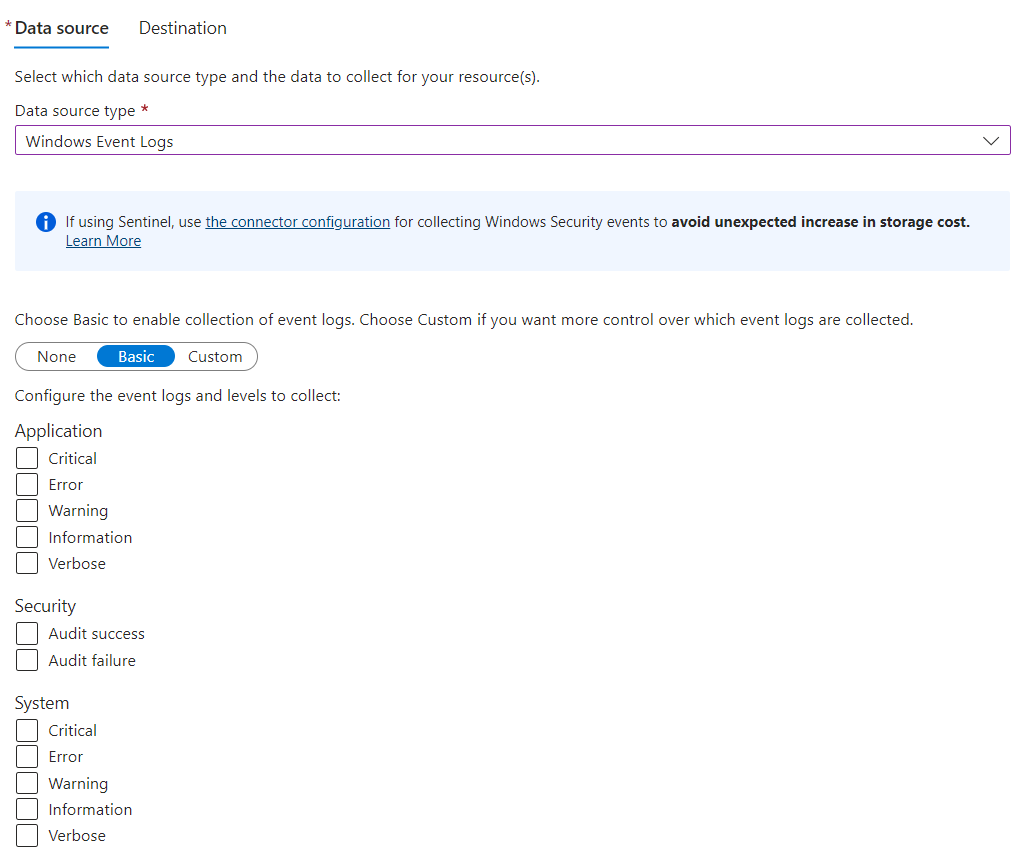

The last step is to choose the Data Source, i.e. what to collect at the Windows Event Logs level. To collect access (logon) logs, for example, select Audit success and Audit failure for the Security log.

What if you want to add a server or change how data is collected? The operation is simple: edit the existing DCR, add or remove servers, or modify the scope of the events to capture.
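
Behind the portal, a Windows event data source is stored in the DCR as an XPath filter. The sketch below builds such a filter for the Security log from the standard Windows audit keyword bit masks and wraps it in a `windowsEventLogs` entry. The data source name and the `Microsoft-Event` stream are assumptions made for illustration (the Sentinel security events connector may use a different stream), so compare the result with the rule the portal actually generates.

```python
import json

# Standard Windows event keyword bit masks used by the Security log.
AUDIT_FAILURE = 0x0010000000000000
AUDIT_SUCCESS = 0x0020000000000000


def security_audit_xpath(success: bool = True, failure: bool = True) -> str:
    """Return an XPath filter selecting Security events by audit keyword."""
    mask = (AUDIT_SUCCESS if success else 0) | (AUDIT_FAILURE if failure else 0)
    if mask == 0:
        raise ValueError("select at least one of success or failure")
    return f"Security!*[System[(band(Keywords,{mask}))]]"


def windows_event_data_source(name: str, stream: str, xpath: str) -> dict:
    """Build one windowsEventLogs entry for a DCR's dataSources section."""
    return {"name": name, "streams": [stream], "xPathQueries": [xpath]}


source = windows_event_data_source(
    name="securityAuditEvents",  # hypothetical data source name
    stream="Microsoft-Event",    # assumed stream, verify against your own rule
    xpath=security_audit_xpath(),
)
print(json.dumps({"dataSources": {"windowsEventLogs": [source]}}, indent=2))
```

Passing `failure=False` narrows the filter to successful audits only, which is one way to reduce the volume of data sent to the workspace.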
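
When the list of servers changes often, it can help to work out the difference between the machines currently attached to a rule and the ones you want. The following is a minimal sketch of that comparison; the subscription ID, resource group and server names are hypothetical, and the actual association or removal is still done in the portal or with your tooling of choice.

```python
def arc_machine_id(subscription: str, resource_group: str, name: str) -> str:
    """Return the Azure resource ID of an Arc-enabled server."""
    return (
        f"/subscriptions/{subscription}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.HybridCompute/machines/{name}"
    )


def plan_scope_change(current: set, desired: set) -> tuple:
    """Return (to_associate, to_dissociate) as sorted lists of resource IDs."""
    return sorted(desired - current), sorted(current - desired)


# Hypothetical subscription, resource group and server names.
SUB, RG = "00000000-0000-0000-0000-000000000000", "rg-arc-servers"
current = {arc_machine_id(SUB, RG, n) for n in ("dc01", "dc02", "fs01")}
desired = {arc_machine_id(SUB, RG, n) for n in ("dc01", "dc02", "dc03")}

to_add, to_remove = plan_scope_change(current, desired)
print("associate:", to_add)
print("dissociate:", to_remove)
```

Here `dc03` would be added to the rule and `fs01` removed, while `dc01` and `dc02` are left untouched.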

Log Analysis

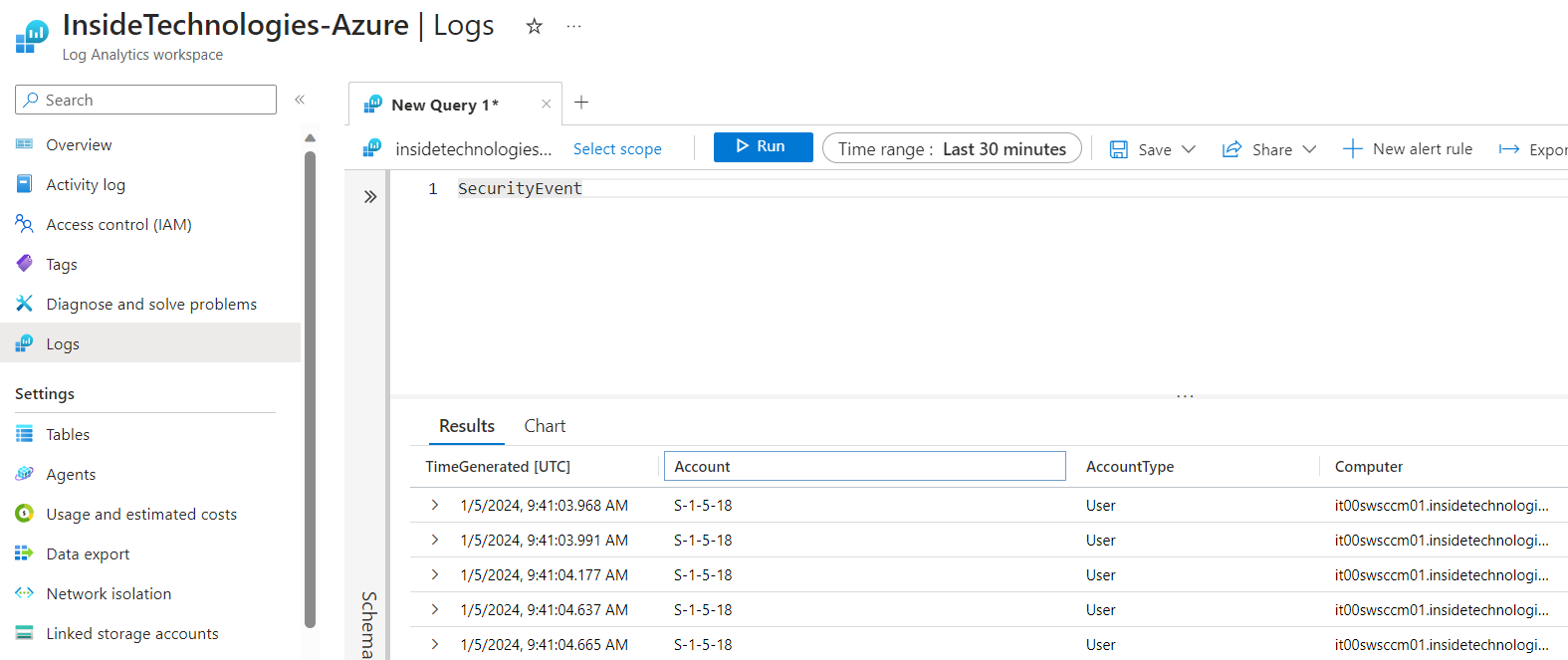

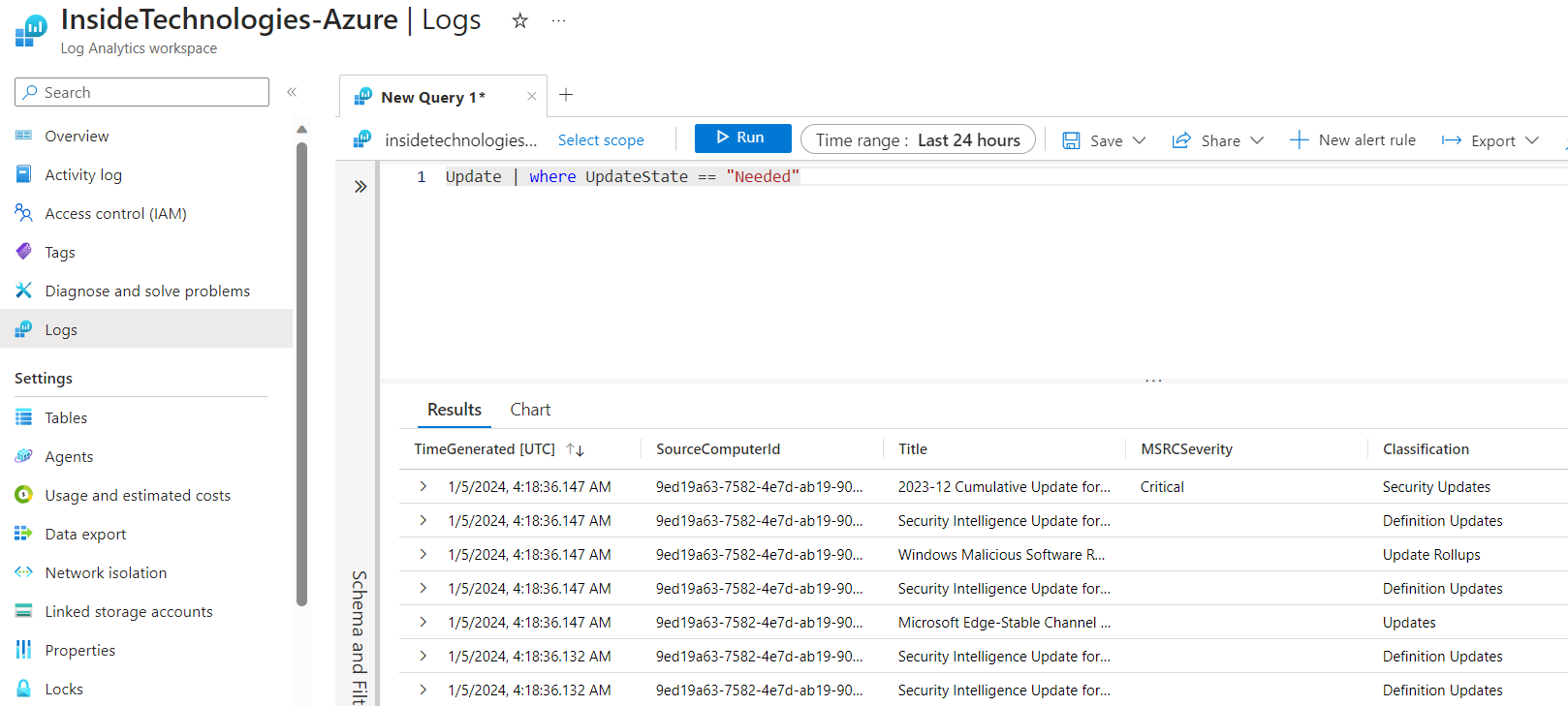

Log analysis is done in Log Analytics using the Kusto Query Language (KQL), which lets you not only extend the search by cross-referencing multiple sources, but also turn the results into graphically formatted tables. For more information on this topic, see the article Kusto Query Language (KQL) overview – Azure Data Explorer & Real-Time Analytics | Microsoft Learn.

When writing a query, always start from the search scope, which is the table to read from: for security events, this is the SecurityEvent table.

To see the status of the updates to be installed on the servers, query the Update table.

Clearly these are very basic examples, but KQL makes it possible to build far more detailed and complete queries.
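
As a sketch of a query that starts from the SecurityEvent scope, the helper below assembles the KQL text for counting failed logons (event ID 4625) per computer and account. The function name and its parameters are illustrative, and the query assumes that Security events are actually being collected into SecurityEvent in your workspace.

```python
def failed_logons_query(days: int = 1, top: int = 20) -> str:
    """Return KQL that counts failed logons (event ID 4625) per computer and account."""
    if days < 1 or top < 1:
        raise ValueError("days and top must be positive")
    return "\n".join([
        "SecurityEvent",                         # search scope: the table to read
        f"| where TimeGenerated > ago({days}d)",  # limit the time window
        "| where EventID == 4625",               # failed logon attempts
        "| summarize FailedLogons = count() by Computer, Account",
        f"| top {top} by FailedLogons desc",
    ])


print(failed_logons_query(days=7, top=10))
```

The printed text can be pasted into the Logs blade of the workspace; the time filter comes first so that later operators work on as few rows as possible.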
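
A comparable sketch for the Update table lists how many distinct updates each server is still missing. The helper names are illustrative, and the query assumes the workspace receives update assessment data in the Update table, which depends on the update solution in use.

```python
from typing import Optional


def kql_string(value: str) -> str:
    """Quote a Python string as a KQL string literal."""
    return '"' + value.replace("\\", "\\\\").replace('"', '\\"') + '"'


def missing_updates_query(classification: Optional[str] = None) -> str:
    """Return KQL that counts distinct missing updates per computer."""
    lines = ["Update", '| where UpdateState == "Needed"']
    if classification is not None:
        # Optional filter, e.g. "Security Updates" or "Critical Updates".
        lines.append(f"| where Classification == {kql_string(classification)}")
    # dcount avoids counting the same update once per assessment record.
    lines.append("| summarize MissingUpdates = dcount(Title) by Computer")
    lines.append("| order by MissingUpdates desc")
    return "\n".join(lines)


print(missing_updates_query("Security Updates"))
```

Calling `missing_updates_query()` without arguments drops the classification filter and counts every update still marked as needed.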

Integration with Microsoft Sentinel

But how does Sentinel fit into all this? Let's start from a very important point: Microsoft Sentinel is not needed to collect system logs or to know whether critical events occur on our servers, because that is done by Azure Arc and Log Analytics. Sentinel is important, however, because Data Connectors and Azure Workbooks make it easy to activate probes that capture suspicious events, which can in turn trigger alerts or remediation.

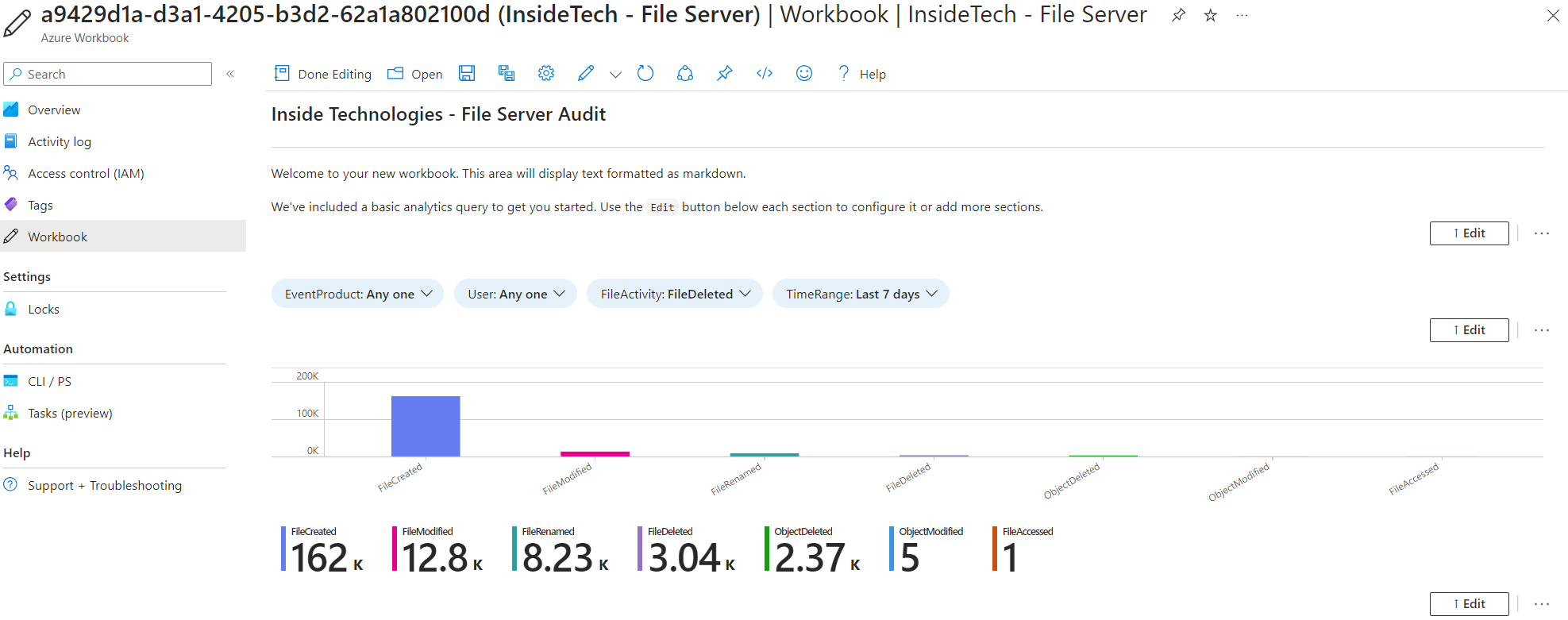

Azure Workbooks also exist outside Microsoft Sentinel and can be created to bring the visualization of a specific scope into a single place, for example a view of our file servers showing the main activities, the most modified files or the most active users.

After all, Azure Workbooks are nothing more than KQL queries presented in a graphical layout.

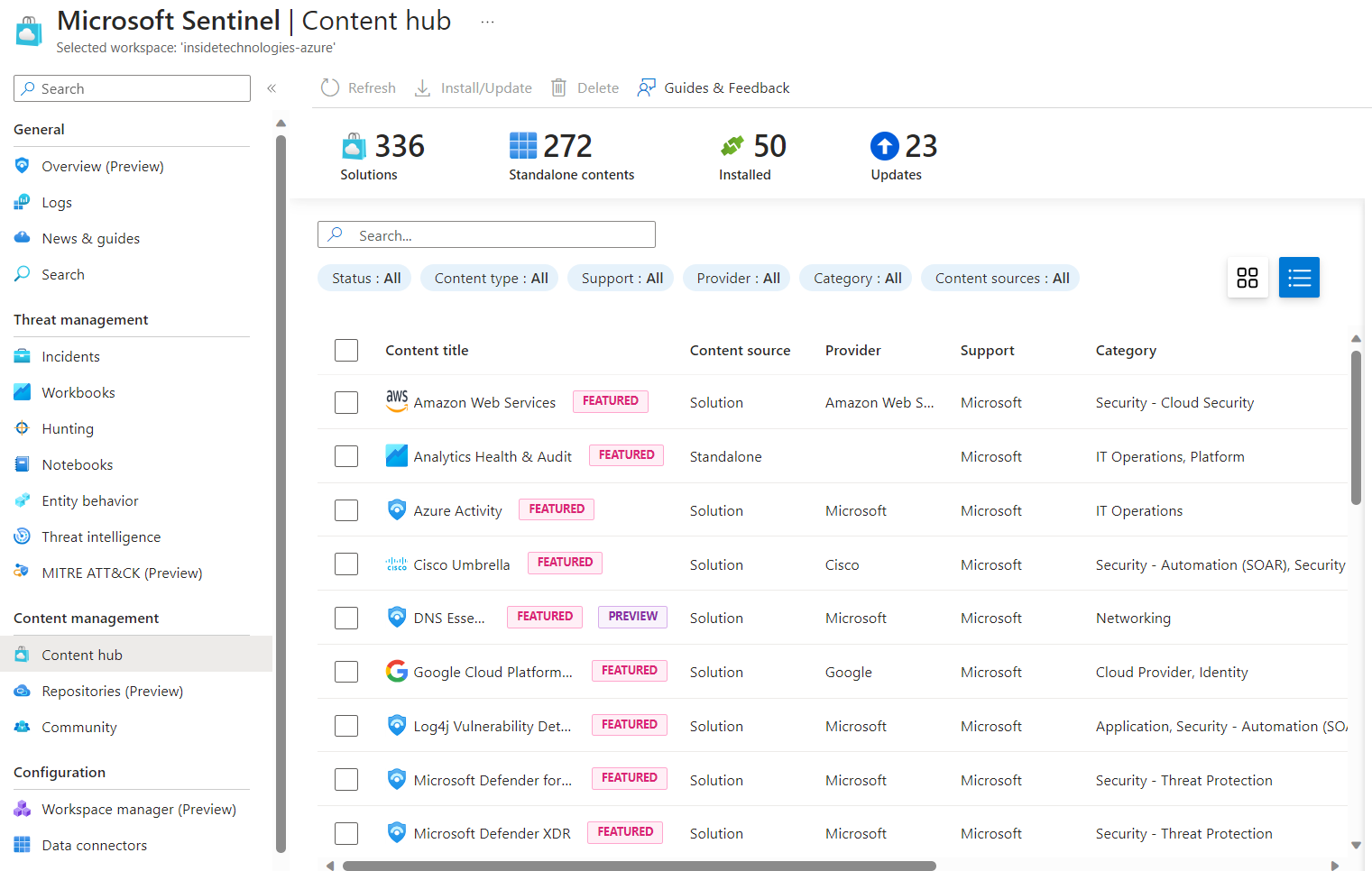

The Content Hub in Microsoft Sentinel is the starting point for importing not only Workbooks but also Analytics Rules, which are needed to intercept anomalous activities; the latter can be enabled even without any Workbooks.

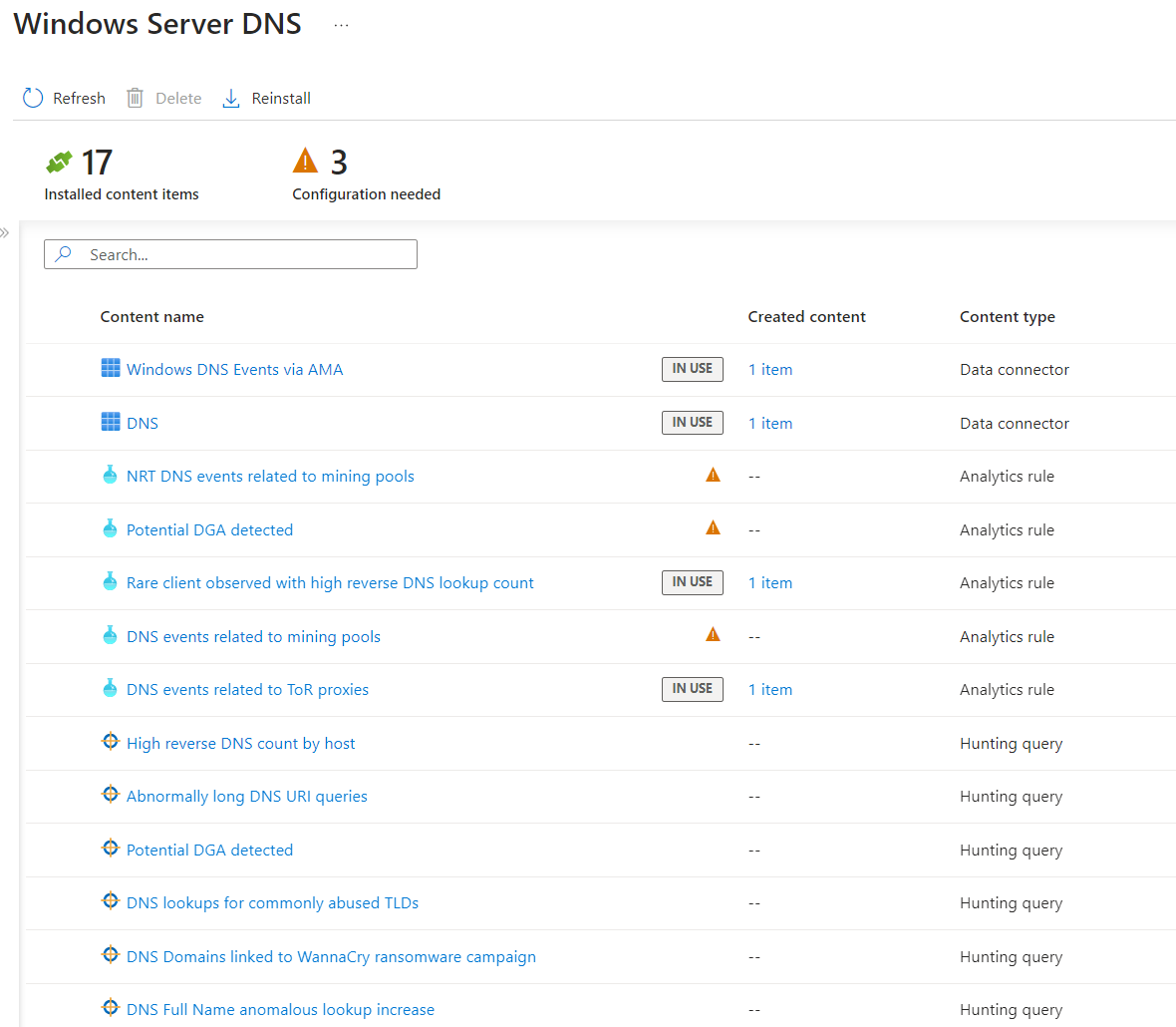

For example, the solution called Windows Server DNS helps us understand whether malicious DNS queries are being made within our infrastructure, or whether atypical internal or external scans are being run. Through the Content Hub you can easily activate not only the Workbooks but also the Hunting Queries needed to detect threats. The only items not active by default are the Analytics Rules, which must be enabled manually; this avoids a flood of alerts and makes users aware of what they are activating.

Conclusions

Azure Arc is fundamental for extending the management of your infrastructure and for making the most of the solutions offered by the cloud, such as log collection or centralized server updating. In the next articles we will look in more detail at how to build log capture logic for the most common business scenarios.